Homelab - Hardware and Layout

I’ve got what I think is a fairly substantial, though definitely aging, homelab, so I thought I’d write a post on what it consists of.

Hardware

Current setup:

- 3x ESXi hosts - each is a 1U Supermicro server with 192GB of memory on an X9DRI-LN4F+ motherboard

- 1x Network storage server - A 2U Supermicro, 64GB of memory, X9DRI-LN4F+ motherboard

- Intel Xeon E5-2650 2.00GHz CPUs (old!)

- 1x Mikrotik 24 port CRS326-24S+2Q+RM

- 1x Mikrotik 24 port CRS326-24G-2S+RM

- Battery backup - Cyberpower 1500VA

- Firewall - Protectli Vault 6 Port running OpenBSD

Extra hardware:

- Juniper EX3300

- Many server components: disks, motherboards, memory, etc

Storage Server

This is an Ubuntu 18.04 server using ZFS on Linux, with four spinning disks arranged as two mirrored pairs, plus a separate log (SLOG) disk. Brilliantly and originally, I’ve named the zpool tank.

$ zpool status

pool: tank

state: ONLINE

scan: scrub repaired 0B in 23h24m with 0 errors on Sun May 8 23:48:18 2022

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

wwn-0x5000cca221c07016 ONLINE 0 0 0

wwn-0x5000cca221c8e492 ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

wwn-0x5000cca221c8e026 ONLINE 0 0 0

wwn-0x5000cca221db40d0 ONLINE 0 0 0

logs

wwn-0x55cd2e404c0f5d34 ONLINE 0 0 0
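The layout in that output (two mirror vdevs plus a log device) can be pulled out of the text programmatically. What follows is only a rough sketch for checking pool health from a script: `parse_zpool_status` is a hypothetical helper written for this post, not part of ZFS, and it assumes the column layout shown above.

```python
# Sample text copied from the `zpool status` output above.
SAMPLE = """\
pool: tank
state: ONLINE
scan: scrub repaired 0B in 23h24m with 0 errors on Sun May 8 23:48:18 2022
config:
NAME STATE READ WRITE CKSUM
tank ONLINE 0 0 0
mirror-0 ONLINE 0 0 0
wwn-0x5000cca221c07016 ONLINE 0 0 0
wwn-0x5000cca221c8e492 ONLINE 0 0 0
mirror-1 ONLINE 0 0 0
wwn-0x5000cca221c8e026 ONLINE 0 0 0
wwn-0x5000cca221db40d0 ONLINE 0 0 0
logs
wwn-0x55cd2e404c0f5d34 ONLINE 0 0 0
"""


def parse_zpool_status(text):
    """Group devices by vdev and collect any entry that is not ONLINE."""
    layout = {}      # vdev name -> list of member device names
    unhealthy = []   # (name, state) for anything not ONLINE
    pool = None
    current = None
    in_config = False
    for raw in text.splitlines():
        fields = raw.split()
        if not fields:
            continue
        if fields[0] == "pool:":
            pool = fields[1]
            continue
        if fields[0] == "NAME":
            in_config = True   # device rows start after the header row
            continue
        if not in_config:
            continue
        if fields[0] == "errors:":
            break
        name = fields[0]
        if name == "logs":
            current = "logs"   # section header, has no state column
            layout[current] = []
            continue
        state = fields[1] if len(fields) > 1 else "UNKNOWN"
        if state != "ONLINE":
            unhealthy.append((name, state))
        if name == pool:
            continue
        if name.startswith(("mirror-", "raidz")):
            current = name
            layout[current] = []
        elif current is not None:
            layout[current].append(name)
    return pool, layout, unhealthy


pool, layout, unhealthy = parse_zpool_status(SAMPLE)
for vdev, disks in layout.items():
    print(pool, vdev, len(disks), "disk(s)")
print("healthy" if not unhealthy else f"problems: {unhealthy}")
```

On a live system the text would come from running `zpool status tank` rather than from a pasted string.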

I also have an NVMe disk with 1.8T of storage, formatted with XFS. I’ve called this one Mammoth. I’m surprised this drive is still working, as it’s just a Western Digital Blue disk that I thought would quickly wear out, but it’s still going strong. I put it into a generic PCIe adapter and it’s been working well, so far (though again, I expect it to fail at some point).

$ mount | grep xfs

/dev/nvme0n1 on /mammoth/1 type xfs (rw,relatime,attr2,inode64,noquota)

The ZFS and XFS storage is exported via NFS to the ESXi hosts.

ESXi Hosts

Not much special here, just some older 1U nodes. vCenter is running as a VM on these hosts. The E5-2650 CPUs likely won’t work with vSphere 8.

Each node also has a 1TB SSD in it, but I don’t use these much. If I’m doing a nested deployment of vSphere, I’ll put the nested, virtualized ESXi hosts on these disks and manually distribute them across the three hosts, but otherwise I don’t currently use them.

Initially I used inexpensive USB flash drives to boot ESXi, but those stopped working, so I had to install ESXi onto local drives, which right now are spinning disks that I had been using when I was testing out vSAN.

Networking

Mikrotik

At this time, for the lab, I’m using Mikrotik network switches, mostly because they are extremely low power and incredibly quiet. The two switches together draw roughly a third of the power of a single switch from another vendor.

I have several other switches that could be in place, for example a Juniper EX3300 with 24x 1GbE ports and 4x 10GbE ports, but while it has better performance, it’s louder and draws about another amp.

NOTE: Please take a look at the performance testing for Mikrotik switches and note the switching performance. They might not work for you. :)

[admin@MikroTik] > /system resource print

uptime: 4w2h33m31s

version: 6.42.12 (long-term)

build-time: Feb/12/2019 08:23:13

factory-software: 6.41

free-memory: 480.5MiB

total-memory: 512.0MiB

cpu: ARMv7

cpu-count: 1

cpu-frequency: 800MHz

cpu-load: 0%

free-hdd-space: 3896.0KiB

total-hdd-space: 16.0MiB

write-sect-since-reboot: 53897

write-sect-total: 102370

bad-blocks: 0%

architecture-name: arm

board-name: CRS326-24G-2S+

platform: MikroTik
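As a small aside, the `key: value` shape of that output is easy to turn into numbers. The sketch below is illustrative only: the helper names are made up for this post, and it assumes RouterOS durations are built from `w`, `d`, `h`, `m` and `s` units, as in the uptime value above.

```python
import re

# Excerpt of the `/system resource print` output shown above.
SAMPLE = """\
uptime: 4w2h33m31s
free-memory: 480.5MiB
total-memory: 512.0MiB
"""

UNIT_SECONDS = {"w": 604800, "d": 86400, "h": 3600, "m": 60, "s": 1}


def parse_resource(text):
    """Split 'key: value' lines into a dict, ignoring anything else."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def duration_seconds(value):
    """Convert a duration such as '4w2h33m31s' into seconds."""
    pairs = re.findall(r"(\d+)([wdhms])", value)
    return sum(int(number) * UNIT_SECONDS[unit] for number, unit in pairs)


def mib(value):
    """Convert a string such as '480.5MiB' into a float number of MiB."""
    return float(value.replace("MiB", ""))


res = parse_resource(SAMPLE)
uptime_days = duration_seconds(res["uptime"]) / 86400
total = mib(res["total-memory"])
used_pct = (total - mib(res["free-memory"])) / total * 100
print(f"up {uptime_days:.1f} days, {used_pct:.1f}% of memory in use")
```

For this switch that works out to about four weeks of uptime with only a few percent of memory in use, which fits the 0% CPU load shown.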

The 1GbE switch does all the routing; the VLAN gateways live here. It also does DHCP for the services that need it.

Configuring the Mikrotiks is a bit unusual compared to other major switch vendors, e.g. Juniper. There’s no commit/rollback model.

[admin@MikroTik] > /interface bridge port print

Flags: X - disabled, I - inactive, D - dynamic, H - hw-offload

# INTER... BRIDGE HW PVID PR PATH-COST INTERNA... HORIZON

0 H ;;; defconf

ether1 bridge yes 1 0x 10 10 none

SNIP!

The bridge model is a bit unusual as well; note the HW column, which shows “yes” when the port is hardware-offloaded (the H flag).

Juniper EX3300

My EX3300 would likely make more sense as the switch in this environment: I would only need the one switch, since it has four 10 gig ports (perfect for my four nodes), and it runs at wire speed. Ultimately, though, I just like that the Mikrotiks are quieter and lower power and, so far, I haven’t run into any performance problems (that I’m aware of).

I’ve had both the EX3300 and the Mikrotiks in place in different versions of the lab. If performance were key, I would definitely use the EX3300 and accept the additional noise and power use. Honestly, the EX3300 is the perfect lab switch, but I’m not using it right now.

Next time I rebuild the lab, I might use the EX3300. :)

Firewall

I run a small fanless OpenBSD-based firewall with six interfaces, which I use to segregate my various home networks. I’m an OpenBSD fan, so I’m always looking for a spot to put some OpenBSD.

NOTE: This device runs really hot. I’ve read that some people change the thermal paste on these kinds of systems, though I have not done that. I’m expecting this device to fail at some point just from running so hot all the time. Perhaps it was not a wise investment. That remains to be seen.

Six interfaces sounds like a lot, but if you’re physically separating networks out, it’s just the right amount.

# uname -a

OpenBSD firewall 6.9 GENERIC.MP#473 amd64

# ifconfig | grep em

em0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> mtu 1500

em1: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> mtu 1500

em2: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> mtu 1500

em3: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> mtu 1500

em4: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> mtu 1500

em5: flags=8802<BROADCAST,SIMPLEX,MULTICAST> mtu 1500

Only certain networks are allowed to talk to other networks: basic segregation.
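One detail in the `ifconfig` output above is easy to miss: the `flags=` value is a hexadecimal bitmask, and `em5` (8802) lacks the UP and RUNNING bits that the other five interfaces (8843) have. Here is a minimal sketch of decoding it, using the standard BSD interface flag values for the five names that appear above; the function names are just for illustration.

```python
import re

# Sample lines copied from the ifconfig output above.
SAMPLE = """\
em0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> mtu 1500
em1: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> mtu 1500
em5: flags=8802<BROADCAST,SIMPLEX,MULTICAST> mtu 1500
"""

# Only the flag bits that appear in the output above.
IFF_FLAGS = {
    0x1: "UP",
    0x2: "BROADCAST",
    0x40: "RUNNING",
    0x800: "SIMPLEX",
    0x8000: "MULTICAST",
}

LINE_RE = re.compile(r"^(\w+): flags=([0-9a-fA-F]+)<")


def decode_flags(value):
    """Return the names of the known flag bits set in value."""
    return [name for bit, name in IFF_FLAGS.items() if value & bit]


def interface_states(text):
    """Map interface name -> True if both UP and RUNNING are set."""
    states = {}
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            flags = int(match.group(2), 16)  # the value is printed in hex
            states[match.group(1)] = bool(flags & 0x1) and bool(flags & 0x40)
    return states


for name, active in interface_states(SAMPLE).items():
    print(name, "up and running" if active else "not in use")
```

So of the six ports, five are up and one is still spare.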

Battery Backup

Power goes off in Toronto fairly regularly, once every three or four months, but usually it’s only a blip, maybe a minute or two of power failure. For a while I just kept restarting everything, but eventually I hit a corruption issue and had to rebuild, so it was time for a battery backup. Better to spend a couple hundred dollars than the time rebuilding and restarting. With this in place my nodes have not gone down once, though the battery would only last for maybe 10 to 15 minutes, so in an extended power loss everything will still go down.

DNS, NTP - Laptop

I use an old IBM laptop for DNS and NTP. Because a laptop has its own battery, this one has been up for a long, long time, years in fact:

$ uptime

21:26:05 up 952 days, 23:24, 2 users, load average: 0.00, 0.00, 0.00

That’s right, 952 days. Insecure, yes, but wow, this is some serious uptime. The screen has stopped working, but I can still ssh in. I don’t know how this thing is still working, but at this point I have to leave it just to see how long it will continue to stay up!

Environment

All these servers and switches are installed in a medium-sized enclosed rack that I bought off Kijiji. I don’t run any air conditioning at all, and these servers live in my cold room, which isn’t that cold and can easily be 30C or higher in the summer, but the whole system just keeps chugging along. It’s also dusty in the basement and, again, things surprisingly just keep working. Maybe once a year I shut most things down and clean everything off.

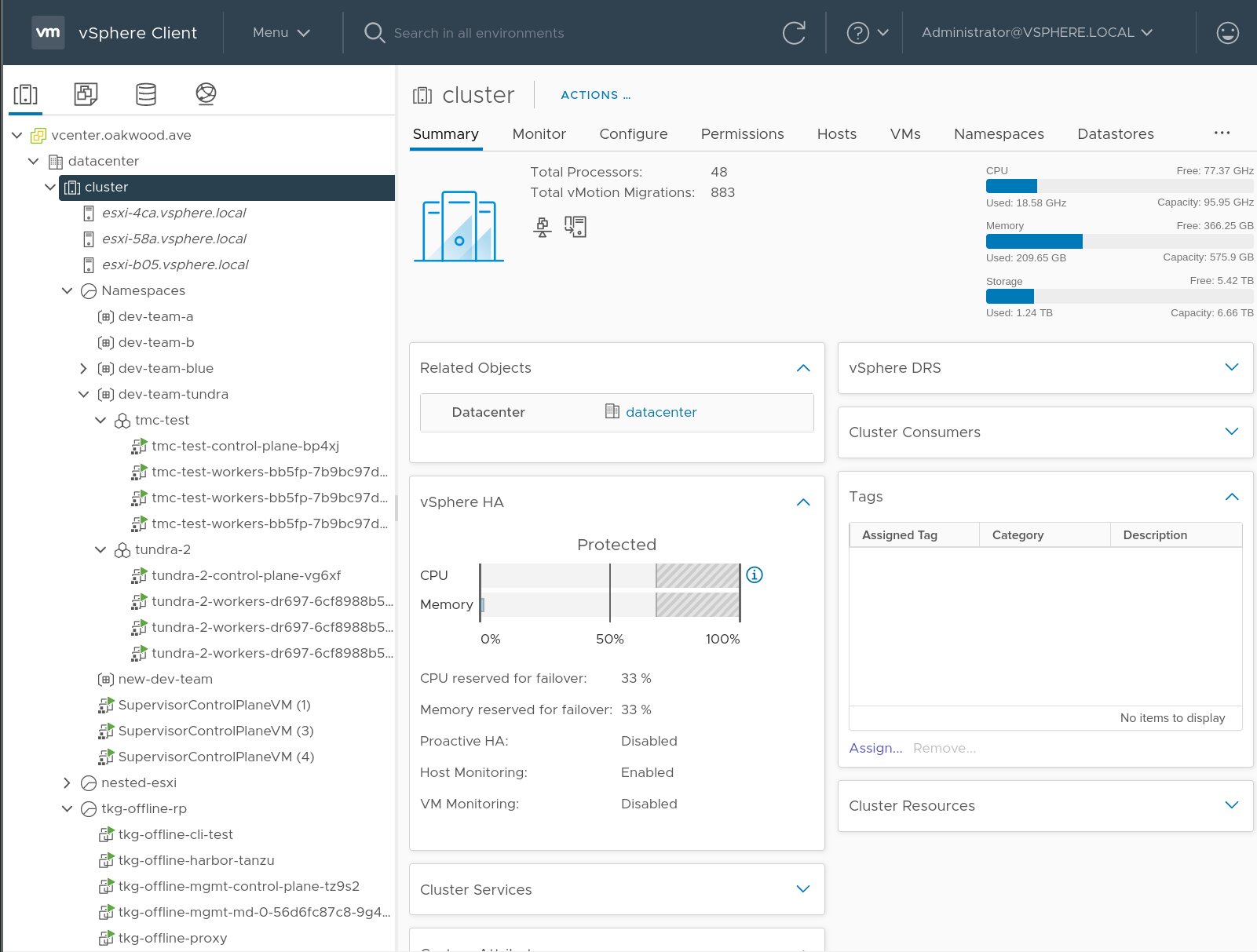

What is this lab running?

- vSphere 7

- vSphere with Tanzu, using NSX Advanced Load Balancer (Avi)

- Tanzu Kubernetes Grid - internet restricted and non-restricted deployments

- Many Kubernetes clusters (thanks to TKG and vSphere with Tanzu)

- Used to run NSX, but it’s currently not deployed in this version of the lab

Conclusion

This all draws 6 or 7 amps and has been running for well over three years. The Supermicros just keep running, no matter how dusty or hot. One major issue is that if I continue to run a vSphere lab, it’s unlikely the CPUs in these nodes will be supported, so moving to vSphere 8 would mean a major investment in new hardware.
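To put the 6 or 7 amps in more familiar terms, here is a back-of-the-envelope conversion to watts and daily energy use. Two assumptions are baked in that the post does not state: a nominal 120 V supply (typical for Canadian residential circuits) and a power factor of 1, so the figures are really volt-amps and a rough upper bound on real power.

```python
MAINS_VOLTS = 120.0  # assumption: nominal residential voltage, not measured


def watts(amps, volts=MAINS_VOLTS):
    """Apparent power, treating the power factor as 1 (an assumption)."""
    return amps * volts


def kwh_per_day(amps, volts=MAINS_VOLTS):
    """Energy used over 24 hours at a constant draw."""
    return watts(amps, volts) * 24 / 1000


for amps in (6, 7):
    print(f"{amps} A -> {watts(amps):.0f} W, {kwh_per_day(amps):.2f} kWh/day")
```

Under those assumptions the lab sits somewhere around 720 to 840 W, or roughly 17 to 20 kWh per day.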