A Look at the Model Context Protocol


What do these lazy AI systems do? Not a whole heck of a lot without tools and resources. Use the Model Context Protocol to give them a virtual jolt of caffeine!

⚠️ Please note that MCP is moving historically fast! What I've written here today is likely not what you will find on the MCP website tomorrow. I will update this post as needed, but please check the MCP website for the most current information.

Introduction

AI is changing the way we build applications, the way we code, the way we do a lot of things. For me, the recent advances in AI are fascinating because AI is neither really good at everything nor really bad at everything. It’s like Schrödinger’s Cat: it’s both amazing and kind of ridiculous at the same time, existing in two worlds at once. Having said that, we, the royal “we”, are going to use AI, for better or for worse.

However, we are still figuring out how best to use AI to solve problems. One of the things that makes humans unique is our ability to use tools. Can AI use tools? Without help…no. Most chatbots can’t just call an API and take real action; they’re typically limited to producing text of some kind.

Enter the Model Context Protocol, or MCP for short, a protocol that allows AI models to use tools to take action.

What Is the Model Context Protocol?

The Model Context Protocol is an open standard that enables developers to build secure, two-way connections between their data sources and AI-powered tools. The architecture is straightforward: developers can either expose their data through MCP servers or build AI applications (MCP clients) that connect to these servers. - Anthropic

MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools. - MCP

What’s the Value of MCP?

…for our example application, MCP would potentially reduce our workload because we wouldn’t need to write three custom AI integrations. Instead, we’d need only write the weather server once, then deploy to interact with all MCP-compatible clients. - Willow Tree

Instead of maintaining separate connectors for each data source, developers can now build against a standard protocol. As the ecosystem matures, AI systems will maintain context as they move between different tools and datasets, replacing today’s fragmented integrations with a more sustainable architecture. - Dappier

MCP addresses these and other issues:

- Models have specific cut-off dates beyond which they cannot provide reliable information, limiting their response to knowledge available up to their cut-off date and requiring transparent acknowledgement of these limitations.

- Models often don’t have access to real-time data. All responses must be generated solely from information within the model’s training data or context window, without the ability to verify online information or validate sources in real time.

- Lack of agentic capabilities. On their own, models are not capable of taking any action other than providing a textual response. Without MCP, or something similar, models are unable to take the actions we need to make them useful.

Current Limitations: Local Only, Require Approval

Right now, MCP is only supported locally - servers must run on your own machine. But we’re building remote server support with enterprise-grade auth, so teams can securely share their context sources across their organization. - Alex Albert

Crucially, as of today, 3 March 2025, MCP “servers” can really only be used locally! There are certainly some qualifications to that, but for a remote server (which, frankly, is what I consider a server in this context) to be useful, it must be able to authenticate requests from the LLM client. That isn’t completely practical yet, although there is a draft specification for it, so I expect improvements soon! Then again, if we think of this as a purely plugin-based system, it may not be such a major limitation: we just need to install the plugins wherever the tool or agent is running.

Note that the Inspector tool can currently use Server-Sent Events (SSE), although I haven’t tested it.

MCP Transport Types

Note: These are the two main transport methods currently supported by MCP for client-server communication.

- Standard Input/Output (stdio) - The stdio transport enables communication through standard input and output streams. This is particularly useful for local integrations and command-line tools.

- Server-Sent Events (SSE) - SSE transport enables server-to-client streaming with HTTP POST requests for client-to-server communication.
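To make the stdio transport a little more concrete: MCP messages are JSON-RPC 2.0 objects, and over stdio each message is written as a single line of JSON with no embedded newlines. The sketch below only frames and parses messages using the standard library. The `protocolVersion` value (`2024-11-05`) and the client name are assumptions for illustration, so check the current spec for what your SDK actually sends.

```python
import json
from typing import Any, Dict


def encode_message(message: Dict[str, Any]) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside strings as \n, so the only raw
    # newline in the output is the delimiter we append: one message == one line.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_message(line: bytes) -> Dict[str, Any]:
    """Parse one line read from the peer back into a dict."""
    return json.loads(line.decode("utf-8"))


# The first request a client sends is "initialize".
# protocolVersion and clientInfo are illustrative assumptions.
initialize_request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2024-11-05",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    },
}

if __name__ == "__main__":
    wire = encode_message(initialize_request)
    print(wire)
    assert decode_message(wire) == initialize_request
```

In a real client, `wire` would be written to the server process's stdin (for example via `subprocess.Popen`) and replies read line by line from its stdout; the official SDKs handle all of this for you.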

E.g. stdio diagram for my particular use case, where I have Docker running the MCP server.

Further to this, when using Anthropic’s tools, you can only use MCP servers with Claude Desktop (which, by the way, only runs on Windows or macOS).

As well, tool use via LLMs currently requires human approval in most cases, and removing that approval step could allow potentially dangerous operations. It’s difficult to decide what level of risk is acceptable here.
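One way to picture the approval step is as a gate in front of every tool call. The sketch below is purely hypothetical: `require_approval`, `ToolCallRejected`, the `approve` callback and the `delete_file` tool are my own illustrative names, not part of MCP or any SDK. A real client such as Claude Desktop implements this in its own UI.

```python
from typing import Any, Callable, Dict


class ToolCallRejected(Exception):
    """Raised when the human declines a proposed tool call (hypothetical)."""


def require_approval(
    tool: Callable[..., Any],
    approve: Callable[[str, Dict[str, Any]], bool],
) -> Callable[..., Any]:
    """Wrap a tool so that it only runs after `approve` returns True."""

    def gated(**arguments: Any) -> Any:
        # Show the human exactly which tool and arguments the model asked for.
        if not approve(tool.__name__, arguments):
            raise ToolCallRejected(f"User rejected call to {tool.__name__}")
        return tool(**arguments)

    return gated


def delete_file(path: str) -> str:
    # Hypothetical "dangerous" tool: it only pretends to delete.
    return f"would delete {path}"


def ask_on_console(name: str, arguments: Dict[str, Any]) -> bool:
    # Interactive approver: only an explicit "y" allows the call.
    answer = input(f"Allow {name}({arguments})? [y/N] ")
    return answer.strip().lower() == "y"
```

Wiring it up is `require_approval(delete_file, ask_on_console)`. Auto-approving everything is just `approve=lambda name, args: True`, which is exactly the trade-off described above: convenient, but nothing stands between the model and `delete_file`.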

What Does an MCP Server Look Like?

Anthropic has created guides for many languages which can be found in their main repository. For Python specifically, they provide a Python SDK. Additionally, there is a growing list of community-created servers available as well.

Let’s take a quick look at a simple example of an MCP server written in Python.

First: Resources, Tools, and Prompts

Claude Desktop can use multiple sources of information and capabilities via MCP; here we’ll use one resource and a simple addition tool.

| Component | Description |
|---|---|
| Resources | Represent static data or information that the LLM can access as context, like a database record or a file, essentially providing “read-only” data for the model to reference. |
| Tools | Executable functions that the LLM can call to perform actions or retrieve dynamic information, allowing the model to actively interact with external systems like making API calls or calculations. |
| Prompts | Structured templates that guide the LLM interaction by providing a standardized format for user input and expected output, acting like a reusable interaction pattern. |
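On the wire, each of these primitives has its own pair of JSON-RPC methods: `resources/list` and `resources/read`, `tools/list` and `tools/call`, and `prompts/list` and `prompts/get`. The sketch below only builds the request bodies a client would send. The `make_request` helper and the `summarize_events` prompt name are hypothetical, and the parameter names follow my reading of the 2024-11-05 spec revision, so double-check them against the current version.

```python
import itertools
import json
from typing import Any, Dict, Optional

# Simple counter so each request gets a unique JSON-RPC id.
_ids = itertools.count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object for an MCP method (hypothetical helper)."""
    request: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Resources: list them, then read one by URI (read-only context).
list_resources = make_request("resources/list")
read_events = make_request("resources/read", {"uri": "taico://events"})

# Tools: list them, then call one by name with arguments (an action).
list_tools = make_request("tools/list")
call_add = make_request("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}})

# Prompts: list them, then fetch one template by name ("summarize_events" is made up).
list_prompts = make_request("prompts/list")
get_prompt = make_request("prompts/get", {"name": "summarize_events", "arguments": {}})

if __name__ == "__main__":
    for req in (list_resources, read_events, list_tools, call_add, list_prompts, get_prompt):
        print(json.dumps(req))
```

The split mirrors the table: resources are read, tools are called, and prompts are fetched as templates.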

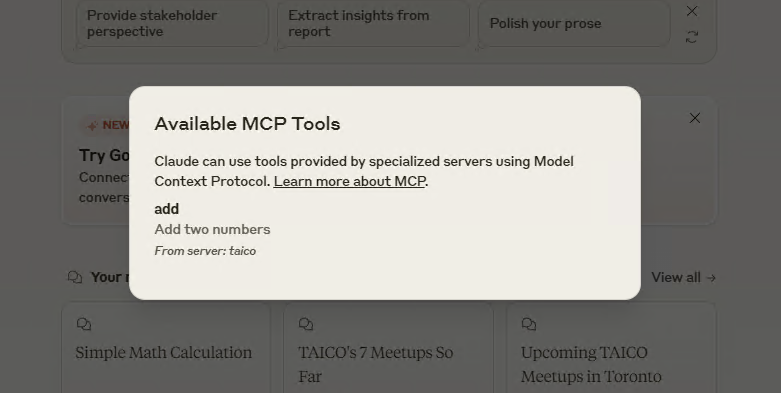

I work with an organisation called TAICO and we publish an XML feed of our articles and events. I have created a simple MCP server to retrieve the events in the feed, so that I can ask Claude about the events, to analyse them, etc. So, to be clear, this is not a tool, but rather a resource that provides up-to-date information to the LLM. That said, the “add” tool is also deployed, and from that we can see that the LLM could have access to almost any function.
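The feed-parsing code is elided in the server shown later, so what follows is not that code, only a hedged sketch of how filtering a feed by category term could look. It assumes the feed is Atom (the docstring’s mention of a category *term*, a *published* date and *content* points that way, but that is an assumption), and it parses a made-up inline sample instead of fetching `https://taico.ca/feed.xml`. The `parse_events` name and the sample entries are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict, List

# Atom elements live in this XML namespace.
ATOM_NS = {"atom": "http://www.w3.org/2005/Atom"}

# Made-up sample feed standing in for the real one.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <entry>
    <title>Monthly Meetup</title>
    <link href="https://example.com/events/meetup"/>
    <published>2025-02-20T18:00:00Z</published>
    <category term="event"/>
    <content>Talks and networking.</content>
  </entry>
  <entry>
    <title>A Blog Article</title>
    <link href="https://example.com/articles/post"/>
    <published>2025-02-10T09:00:00Z</published>
    <category term="article"/>
    <content>Some article text.</content>
  </entry>
</feed>"""


def parse_events(feed_xml: str) -> List[Dict[str, Any]]:
    """Return entries that have a <category term="event"/> element."""
    root = ET.fromstring(feed_xml)
    events = []
    for entry in root.findall("atom:entry", ATOM_NS):
        terms = [c.get("term") for c in entry.findall("atom:category", ATOM_NS)]
        if "event" not in terms:
            continue  # skip articles and anything else that isn't an event
        link = entry.find("atom:link", ATOM_NS)
        events.append(
            {
                "title": entry.findtext("atom:title", default="", namespaces=ATOM_NS),
                "link": link.get("href") if link is not None else None,
                "published": entry.findtext("atom:published", default="", namespaces=ATOM_NS),
                "content": entry.findtext("atom:content", default="", namespaces=ATOM_NS),
            }
        )
    return events
```

Inside a resource function, one could then return something like `parse_events(requests.get(url, timeout=10).text)`, though again, that wiring is an assumption rather than how my server necessarily does it.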

Creating an MCP Server

I followed the quickstart at https://modelcontextprotocol.io/quickstart/server.

First, get uv.

```bash
pipx install uv
```

Now, create a new directory for your project.

```bash
uv init taico-feeds
cd taico-feeds

# Create virtual environment and activate it
uv venv
source .venv/bin/activate

# Install dependencies (the server code imports requests, so add it too)
uv add "mcp[cli]" httpx requests

# Create our server file
touch taico-feeds.py
```

Now, edit the taico-feeds.py file to add something like the following code, where I have removed some of the code for brevity.

📝 I then put this into a Docker container, which is how Claude Desktop runs it. I am not a Windows developer, and Claude Desktop only runs on Windows or macOS. However, Claude Desktop can launch a Docker container on Windows, which is easier for me, as I can write the code on Linux and publish the container image. If you develop on Windows, you’ll have a much simpler time of it and can just use mcp to run the server locally.

```python
from mcp.server.fastmcp import FastMCP
import hashlib
import sys
import requests
import xml.etree.ElementTree as ET
from typing import List, Dict, Any

# Create an MCP server
mcp = FastMCP("Taico Feeds")


# Add an addition tool
@mcp.tool()
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


@mcp.tool()
def sha256(text: str) -> str:
    """Get the SHA256 hash of a text"""
    # Log to stderr: with the stdio transport, stdout carries the JSON-RPC
    # messages, so a plain print() to stdout would corrupt the protocol stream.
    print(f"Getting SHA256 hash of text {text}", file=sys.stderr)
    try:
        return hashlib.sha256(text.encode('utf-8')).hexdigest()
    except Exception as e:
        return f"Failed to get SHA256 hash of text {text}: {str(e)}"


# Add a resource to fetch events from Taico feed
@mcp.resource("taico://events")
def get_events() -> List[Dict[str, Any]]:
    """
    Fetch events from the Taico feed.
    Returns a list of events where the category term is 'event'.
    Each event contains title, link, published date, content, and other metadata.
    """
    url = "https://taico.ca/feed.xml"
    # <code removed for brevity>


# Run the server when the script is executed directly
if __name__ == "__main__":
    mcp.run()
```

The code above defines two tools, add and sha256. The add tool simply adds two numbers together, and the sha256 tool takes a string and returns its SHA256 hash.
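Because the tool bodies are ordinary Python, a cheap way to check them is to test the logic directly, without Claude or the Inspector in the loop. This sketch copies the two function bodies into undecorated functions (so it doesn’t need the `mcp` package installed) and checks them against known values; keeping tool logic in plain functions like this is a design choice on my part, not something the SDK requires.

```python
import hashlib


def add(a: int, b: int) -> int:
    """Same logic as the add tool."""
    return a + b


def sha256(text: str) -> str:
    """Same logic as the sha256 tool, minus the logging and error handling."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    assert add(2, 3) == 5
    # Well-known SHA-256 digest of the ASCII string "hello".
    assert sha256("hello") == "2cf24dba5fb0a30e26e83b2ac5b9e29e1b161e5c1fa7425e73043362938b9824"
    print("tool logic OK")
```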

Here is an example Claude Desktop configuration that launches the container.

📝 The container I use in this example is not currently public, but this shows how you could run an MCP server via Docker. Claude Desktop doesn’t need to be able to pull the image from a private registry: in this case I pre-pulled it, and Claude Desktop then starts the container using the command set up in the configuration.

```json
{
  "mcpServers": {
    "taico": {
      "command": "docker",
      "args": ["run", "-i", "--rm", "--init", "-e", "DOCKER_CONTAINER=true", "ghcr.io/ccollicutt/taico-mcp-server:main"]
    }
  }
}
```
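If you’d rather not hand-edit that JSON, a small script can add the entry for you. This is a sketch: `add_docker_server` is a hypothetical helper, and it works on a plain dict so it makes no assumption about where the config file lives on your OS; loading and saving the file is left to you.

```python
import copy
import json
from typing import Any, Dict, List


def add_docker_server(
    config: Dict[str, Any], name: str, image: str, env: Dict[str, str]
) -> Dict[str, Any]:
    """Return a copy of `config` with a Docker-launched MCP server added."""
    # -i keeps the container's stdin open, which the stdio transport needs;
    # --rm cleans up the container when Claude Desktop stops it.
    args: List[str] = ["run", "-i", "--rm", "--init"]
    for key, value in env.items():
        args += ["-e", f"{key}={value}"]  # one -e flag per variable
    args.append(image)  # the image must come after all docker flags

    updated = copy.deepcopy(config)  # don't mutate the caller's config
    updated.setdefault("mcpServers", {})[name] = {"command": "docker", "args": args}
    return updated


if __name__ == "__main__":
    config = add_docker_server(
        {}, "taico", "ghcr.io/ccollicutt/taico-mcp-server:main", {"DOCKER_CONTAINER": "true"}
    )
    print(json.dumps(config, indent=2))
```

Run against an empty config, it reproduces the `taico` entry above; existing `mcpServers` entries are preserved.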

Debug Mode and Inspector

While developing, you can use the mcp command to start the server and run it in debug mode. This will also start the MCP inspector, which is a tool that allows you to inspect the server and the resources and tools it has available.

```
$ mcp dev taico-feeds.py
Need to install the following packages:
@modelcontextprotocol/inspector@0.4.1
Ok to proceed? (y) y
Starting MCP inspector...
Proxy server listening on port 3000
🔍 MCP Inspector is up and running at http://localhost:5173 🚀
```

Results

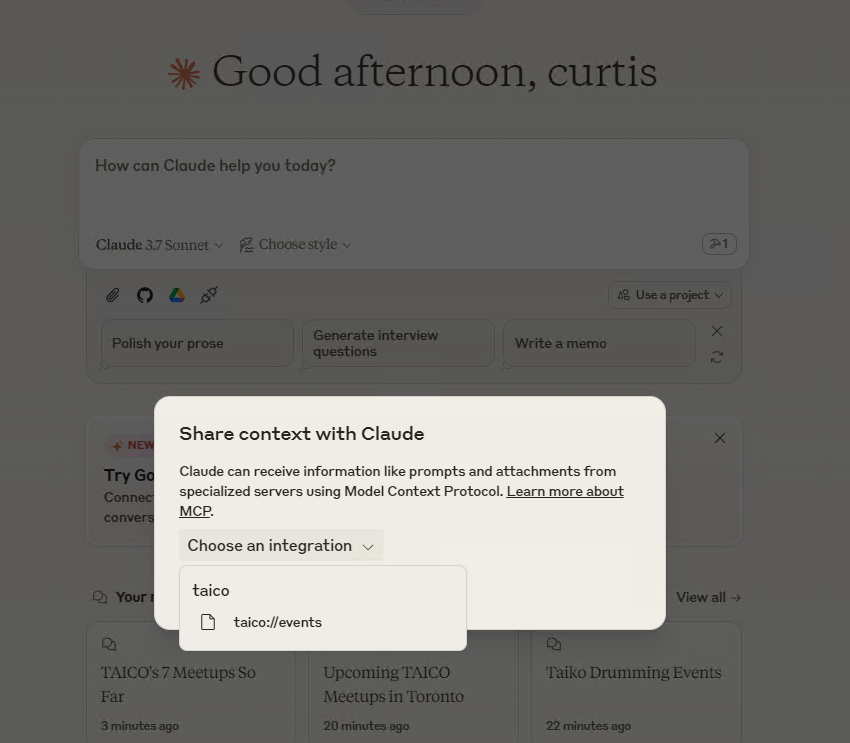

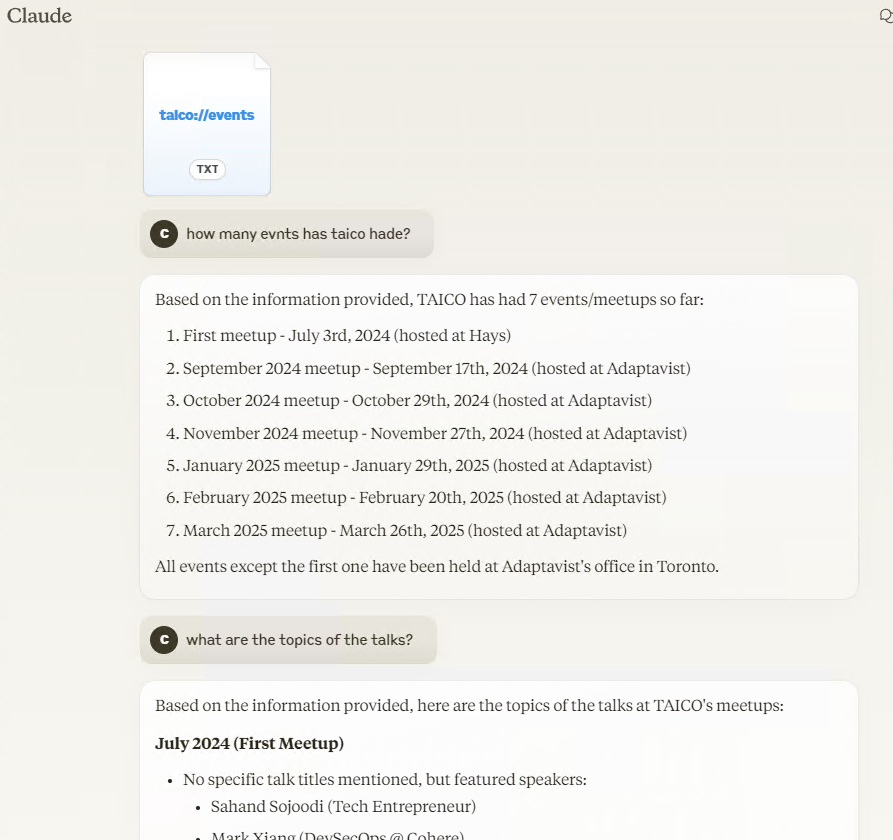

Once I’ve edited the Claude Desktop configuration and restarted it, I see a new taico://events resource, as well as an add tool.

📝 Notice that I never spell anything right when chatting with an LLM, it always figures it out. Why spend time retyping? :)

If I select that resource, I now have a chat with Claude that has access to the Taico Feeds resource. What’s more, we can ask Claude about those events: what they were, what happened, what kind of talks were given, anything we want. Claude now has the data to analyse and provide a response.

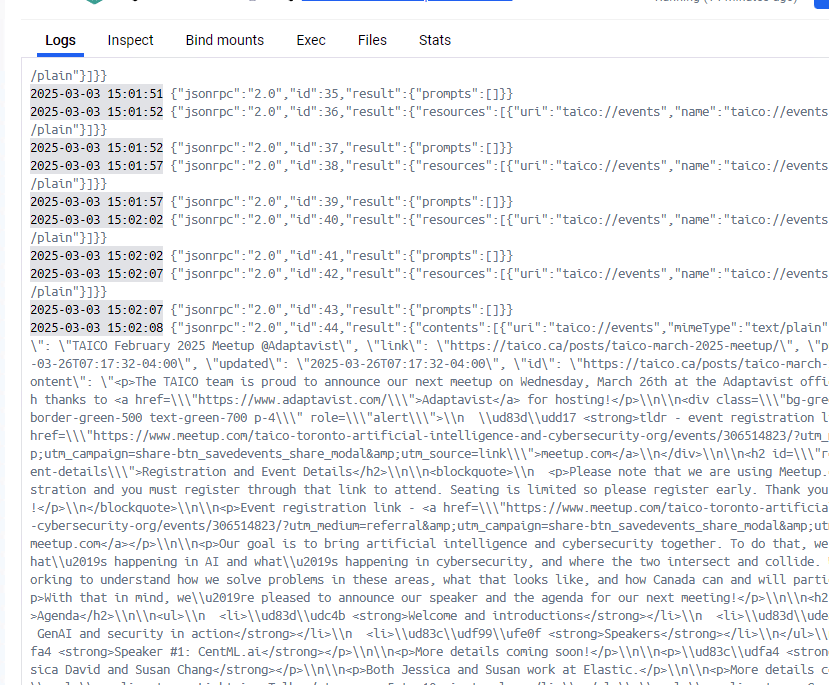

In the Docker Desktop logs, I see that the MCP server is running.

As well, we can see that there is a simple adding tool available.
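When Claude decides to use the add tool, the client sends a `tools/call` request and the server answers with a list of content items. The SDK does this for you; the hand-rolled `handle_tools_call` and `TOOLS` registry below are hypothetical, only meant to show the shape of the exchange, with the result fields (`content`, `type`, `text`, `isError`) and the `-32602` error code taken from my reading of the 2024-11-05 spec revision.

```python
from typing import Any, Callable, Dict

# Registry of plain functions exposed as tools (hypothetical, SDK-free).
TOOLS: Dict[str, Callable[..., Any]] = {"add": lambda a, b: a + b}


def handle_tools_call(request: Dict[str, Any]) -> Dict[str, Any]:
    """Turn a tools/call request into a JSON-RPC response."""
    params = request["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        # Unknown tool: a protocol-level JSON-RPC error (-32602 = invalid params).
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        }
    try:
        value = tool(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    except Exception as exc:
        # Tool failures go inside the result so the model can read them.
        result = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}
```

Note the two failure paths: an unknown tool is a protocol error, while a tool that raises is returned as a normal result with `isError` set, so the model can see the message and try again.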

Other Tools

In this blog post I’ve just used Claude Desktop, but there are other tools that support MCP, e.g. Cursor and mcp-agent among many more, with more coming all the time. So I don’t want to leave you with the impression that this is only for Claude Desktop. Likely every AI tool/agent will provide support for MCP, and perhaps other similar standards that emerge as well.

Specification

There is a specification in the works for MCP:

Conclusion

It’s hard to say whether MCP will be a winner or not. If at some point we can run a remote MCP server and it uses authentication, then it will be extremely useful, perhaps undeniably so–something has to provide this capability. But at the moment it is a local-only solution. Having said that, I definitely see a future where MCP is successful and we all use it every day to make our generative AI applications more useful. It’s a good bet that learning to build MCP servers will be a valuable skill in the future.

The future includes all kinds of fun stuff like authentication, remote servers, discoverability, self-improvement, registries, and more.

⚠️ This post only covered the most basic concepts around MCP, and there is a lot more to it that I will hopefully cover in future posts. Otherwise, I've added a few links to further reading below.

Further Reading

- Marketplace for MCP servers: https://www.mcp.run/

- https://www.docker.com/blog/the-model-context-protocol-simplifying-building-ai-apps-with-anthropic-claude-desktop-and-docker/

- https://www.willowtreeapps.com/craft/is-anthropic-model-context-protocol-right-for-you

- https://www.chriswere.com/p/anthropics-mcp-first-impressions

- https://www.philschmid.de/mcp-example-llama

- https://www.youtube.com/watch?v=sahuZMMXNpI

- https://salesforcedevops.net/index.php/2024/11/29/anthropics-model-context-protocol/

- https://medium.com/@richardhightower/setting-up-claude-filesystem-mcp-80e48a1d3def

- https://glama.ai/blog/2024-11-25-model-context-protocol-quickstart